Here’s a scary thought: as an ecommerce business, your entire bottom line can come down to some truly minuscule website variables. Did you know that when page load time increases from just 1 second to 3 seconds, the probability of a bounce increases by 32%? (Via Google/SOASTA 2017) That’s every visitor becoming roughly a third more likely to leave, all because of those extra 2 seconds.

Page load time, of course, is an easily measurable example of just how much one tiny variable can affect long-term business goals in the ecommerce sphere. But what about all the other little elements and behaviors that make an online shopping experience successful?

Luckily, those pieces are also measurable! A/B testing is the darling of conversion optimizers and digital marketers for a reason: it lets you isolate those little elements of customer experience and discover how they actually affect the performance of your page or site. But to meet your conversion goal (and bottom line), you need to know where to start and how to test!

Outlining Parameters

First, in order to run a test that delivers actionable results, you need to set clear parameters. When A/B testing in ecommerce, this means establishing a hypothesis and isolating a variable. Is it tempting to test multiple elements at once? Absolutely. But if you change several things at once, you can’t tell which change moved the needle, so it won’t expedite the results you need to make conversion-rate-boosting changes.

Even seemingly small adjustments — like changing the positioning of a CTA button — can have significant impact, so be wary of any unintentional changes to control elements while you’re testing.

It’s critical to go in with a hypothesis. For example, you might reason, “if users are clicking on other page elements more frequently than the CTA button, then I may need to reposition it, reword it, or draw more attention to it.”

This way, your results aren’t just numbers: they’re answers to a question that leave you with an actionable plan to test.

Testing Tips

For the uninitiated, ecommerce A/B testing (or split testing) is a way to compare two versions of a variable to determine which one performs better. Some common user experience elements to test are headers, product images, content layouts, pricing, and even button colors. The performance of these elements is typically measured with analytics tools like Google Analytics.

If variable isolation is the name of the game, though, then a couple of testing conditions also need to be taken into account. When running a test, make sure to:

- Test for a minimum of 7 days

  - Weekend traffic versus weekday traffic? Super variable. Make sure you’re getting at least some data from each so you’re not missing important context.

- Determine statistical significance

  - I.e., figuring out “what’s the threshold for the result of this testing idea to be meaningful?”

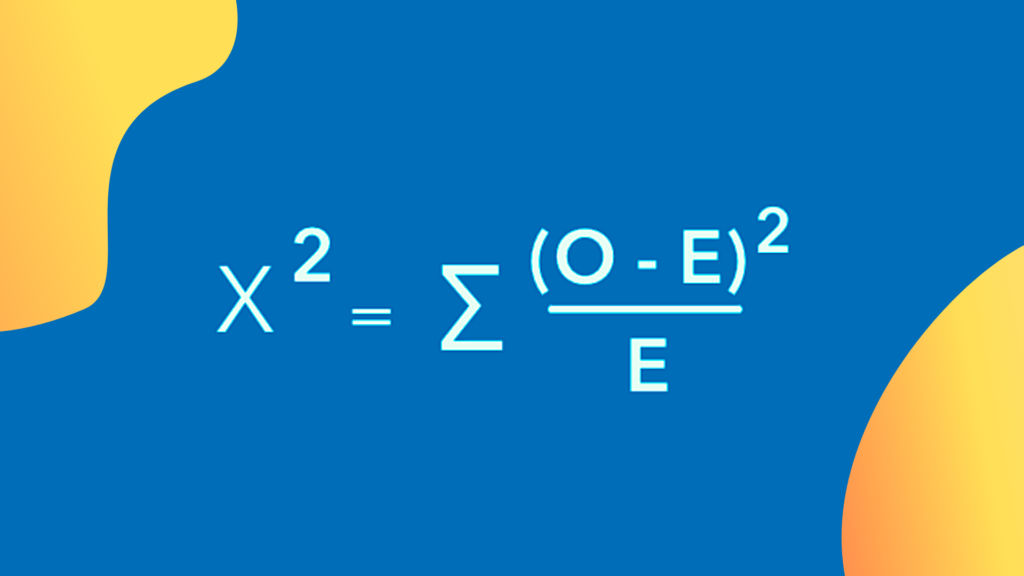

An increase in clicks/sign-ups/any other performance metric may not tell the whole story up front. Increases are very often a good sign, but finding the point at which a result will become statistically significant is a critical benchmark to interpret your results against. In simpler terms, you want to make sure your result isn’t a fluke, and to do so, there’s a bit of math involved.
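To make that benchmark a little more concrete, here’s a rough sketch of how you might estimate how many visitors each version needs before a result can be trusted, and how long that would take. It uses the standard sample-size approximation for comparing two conversion rates. The function names, the 5% significance level, the 80% power, and every number in the example are assumptions for illustration, not figures from any particular tool.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant to detect a change from
    baseline_rate to expected_rate with a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    pooled = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * pooled * (1 - pooled))
        + z_beta * math.sqrt(
            baseline_rate * (1 - baseline_rate)
            + expected_rate * (1 - expected_rate)
        )
    ) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


def estimated_duration_days(visitors_per_variant, daily_visitors, variants=2, min_days=7):
    """Days to run the test, rounded up to whole weeks so that weekday and
    weekend traffic are both represented, and never shorter than min_days."""
    days = math.ceil(visitors_per_variant * variants / daily_visitors)
    weeks = max(1, math.ceil(days / 7))
    return max(min_days, weeks * 7)


# Hypothetical scenario: a 4% conversion rate you hope to lift to 5%,
# on a page that gets about 1,000 visitors a day.
needed = sample_size_per_variant(0.04, 0.05)
print(f"Visitors needed per variant: {needed}")
print(f"Suggested test length: {estimated_duration_days(needed, 1000)} days")
```

The smaller the lift you’re hoping to detect, the more traffic you need, which is why low-traffic pages often have to run well past the 7-day minimum.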

The good news? There’s a plethora of free, automatic statistical significance calculators online to do the hard part for you. Just plug in your data to get your statistical analysis — and discover which version of your variable produces the most intuitive, navigable (read: profitable) version of your site/page.
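If you’re curious what those calculators are doing under the hood, many of them run something along the lines of a two-proportion z-test. Here’s a minimal sketch, assuming you have visitor and conversion counts for each version. The function name and the sample numbers are made up for illustration, and the sketch assumes each version has at least some visitors and conversions.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conversions_a, visitors_a, conversions_b, visitors_b):
    """Return (z, two-sided p-value) for the difference between the
    conversion rates of version A (control) and version B (variant)."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Pooled rate: the conversion rate if both versions performed the same
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 200 of 5,000 visitors converted on version A,
# 260 of 5,000 converted on version B.
z, p = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("Significant at the 5% level" if p < 0.05 else "Not significant at the 5% level")
```

A p-value below your chosen threshold (0.05 is a common convention) suggests the difference between versions is unlikely to be a fluke.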

Interpreting Results — and Putting Them to Work

First and foremost, you want to look at the metric you defined in your hypothesis. If the hypothesis was “placing the CTA button higher on the page will lead to more clicks,” then you’ll of course go straight to comparing the CTA click rate for the version with the higher button against the click rate for the original version over the same test period. Comparing rates rather than raw click counts keeps the comparison fair when the two versions receive different amounts of traffic.

Again, while a positive trend is usually good, you’ll need to determine statistical significance before drawing conclusions. Discovered a statistically significant result that suggests a higher click rate when the button is moved up? Great. That’s a supported hypothesis you can use to guide the rest of your layout strategy (adding more action-benefit phrases to your above-the-fold content, adjusting your headline wording, and so on).

But what if your hypothesis seems wrong, or your results are inconclusive? It’s not necessarily the end of the road. Especially if your testing tools deliver a result that seems contrary to conventional conversion rate optimization wisdom, it never hurts to look back at the testing process and dig into your layout, content, and visuals to ensure there weren’t any errant variables skewing the results. You’ll either discover something that you previously missed, or learn something about your target audience’s behavior, so it’s really a win-win.

Of course, sometimes your page’s performance results will run contrary to that conventional CRO wisdom, and that may just be a function of your niche/product. Also a valuable insight! You might ask yourself, “what about this product or industry changes the way people shop and gather information?” which in turn may provide more hypotheses to test.

The real secret? Well-founded hypotheses are the key to uncovering effective performance insights. A/B testing for your ecommerce site may leave you with clear, actionable results, or it may leave you with more sharply honed questions, but what it’ll never leave you with is a 100% perfectly optimized page or site.

There’s no perfect when it comes to ecommerce stores and conversion because behaviors, interests, and shopping patterns are constantly changing. Asking increasingly focused questions, isolating variables, and user testing to uncover statistically significant results about site performance, though, are the most effective ways to keep improving.

And in a sphere where there’s no such thing as 100% optimization, rapid, data-supported improvement is really the key to continually driving your business objectives forward.

Want to drive up your conversions by taking your testing strategy to the next level? Give us a call!